What is Algorithms:

An algorithm is a finite sequence of well-defined, step-by-step instructions or a set of rules that, when followed, can solve a specific problem or perform a particular task. Algorithms are fundamental in computer science and mathematics and serve as the basis for designing software, solving computational problems, and automating processes. They provide a systematic and structured approach to problem-solving, enabling efficient and reliable execution of tasks. Algorithms can range from simple and straightforward to complex and sophisticated, depending on the problem they are designed to address.

Table of Contents

Qualities of a Good Algorithm

- Input and output should be defined precisely.

- Each step in the algorithm should be unambiguous, which means that its instructions should be clear and straightforward.

- Algorithms should have a limited number of instructions, i.e., the instructions should be countable.

- Algorithms should be most effective among many different ways to solve a problem.

- An algorithm shouldn’t include computer code. An algorithm must be language-independent, which means that its instructions can be implemented in any language and produce the same results.

Need of Algorithm

- To understand the basic idea of the problem.

- To find an approach to solve the problem.

- To understand the basic principles of designing the algorithms.

- To compare the performance of the algorithm with respect to other techniques.

- To improve the efficiency of existing techniques.

- The Algorithm gives a clear description of requirements and goal of the problem to the designer.

- To measure the behaviour (or performance) of the methods in all cases (best cases, worst cases, average cases) and analyze the complexity (time and space) of the problems concerning input size without implementing and running it; it will reduce the cost of design. With the help of an algorithm, we can also identify the resources (memory, input-output) cycles required by the algorithm.

Difference between Algorithm and the Pseudo-code

- An algorithm is simply a problem-solving process, which is used not only in computer science to write a program but also in our day to day life. It is nothing but a series of instructions to solve a problem or get to the problem’s solution. It not only helps in simplifying the problem but also to have a better understanding of it.

- However, Pseudo-code is a way of writing an algorithm. Programmers can use informal, simple language to write pseudo-code without following any strict syntax. It encompasses semi-mathematical statements.

Types of Algorithms

There are several types of algorithms available. Some important algorithms are:

- Brute Force Algorithm: It is the simplest approach for a problem. A brute force algorithm is the first approach that comes to finding when we see a problem.

- Recursive Algorithm: A recursive algorithm is based on recursion. In this case, a problem is broken into several sub-parts and called the same function again and again.

- Backtracking Algorithm: The backtracking algorithm basically builds the solution by searching among all possible solutions. Using this algorithm, we keep on building the solution following criteria. Whenever a solution fails we trace back to the failure point and build on the next solution and continue this process till we find the solution or all possible solutions are looked after.

- Searching Algorithm: Searching algorithms are the ones that are used for searching elements or groups of elements from a particular data structure. They can be of different types based on their approach or the data structure in which the element should be found.

- Sorting Algorithm: Sorting is arranging a group of data in a particular manner according to the requirement. The algorithms which help in performing this function are called sorting algorithms. Generally sorting algorithms are used to sort groups of data in an increasing or decreasing manner.

- Hashing Algorithm: Hashing algorithms work similarly to the searching algorithm. But they contain an index with a key ID. In hashing, a key is assigned to specific data.

- Divide and Conquer Algorithm: This algorithm breaks a problem into sub-problems, solves a single sub-problem and merges the solutions together to get the final solution. It consists of the following three steps divide, solve and combine.

- Greedy Algorithm: In this type of algorithm the solution is built part by part. The solution of the next part is built based on the immediate benefit of the next part. The one solution giving the most benefit will be chosen as the solution for the next part.

- Dynamic Programming Algorithm: This algorithm uses the concept of using the already found solution to avoid repetitive calculation of the same part of the problem. It divides the problem into smaller overlapping subproblems and solves them.

- Randomized Algorithm: In the randomized algorithm we use a random number so it gives immediate benefit. The random number helps in deciding the expected outcome.

Best Example for learn

Algorithm 1: Add two numbers entered by the user

Step 1: Start

Step 2: Declare variables num1, num2 and sum.

Step 3: Read values num1 and num2.

Step 4: Add num1 and num2 and assign the result to sum. sum←num1+num2

Step 5: Display: sum

Step 6: Stop

Masuma

Algorithm 2: Find the largest number among three numbers

Step 1: Start

Step 2: Declare variables a, b and c.

Step 3: Read variables a, b and c.

Step 4:

If a > b

If a > c

Display: a is the largest number.

Else

Display: c is the largest number.

Else

If b > c

Display: b is the largest number.

Else

Display: c is the greatest number.

Masuma

Step 5: Stop

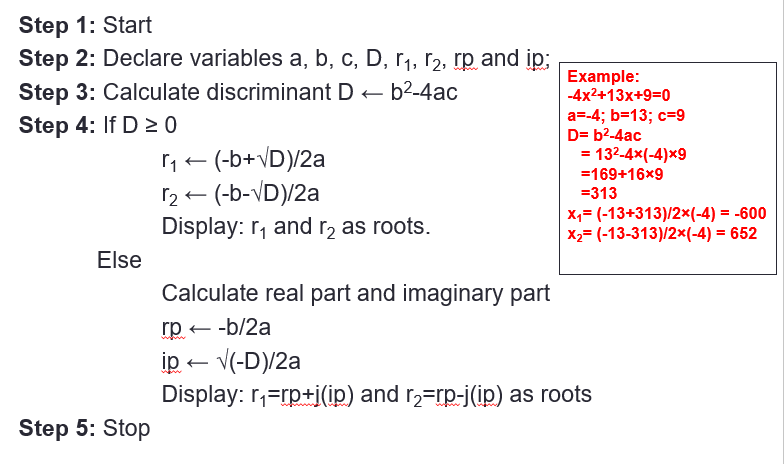

Algorithm 3: Find Roots of a Quadratic Equation ax2 + bx + c = 0

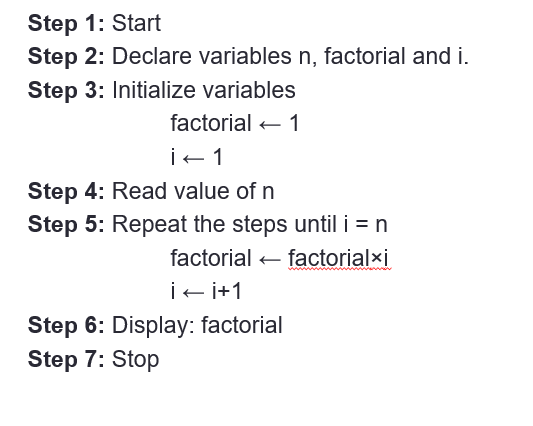

Algorithm 4: Find the factorial of a number

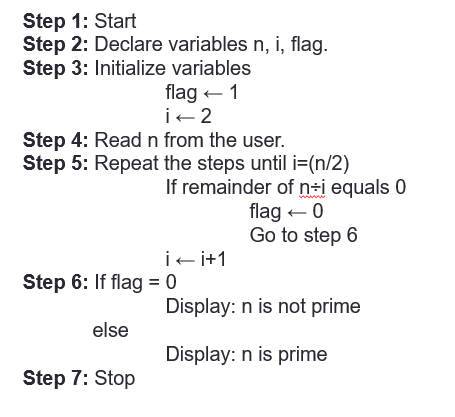

Algorithm 5: Check whether a number is prime or not

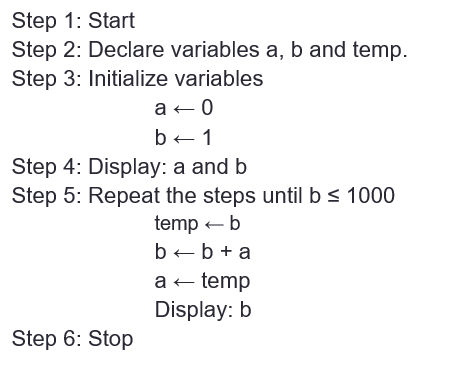

Algorithm 6: Find the Fibonacci series till the term less than 1000

Analysis of an Algorithm

The algorithm can be examined at two levels; before and after it is created. The two algorithm analyses are as follows:

•Priori Analysis: This refers to the theoretical analysis of an algorithm performed before implementing the algorithm considering various factors such as processor speed, which does not affect the implementation.

•Posterior Analysis: This refers to a practical analysis of an algorithm performed after implementing the algorithm. This analysis determines how much running time and space is required. A numbers of important factors like user-friendliness, modularity, simplicity, maintainability, etc. are used to design and analyse an algorithm.

Factors of an Algorithm

The following are the factors to consider when designing an algorithm:

•Modularity: Modularity is a feature of an algorithm by which you can break a given problem down into small-small modules or small-small steps, which is a basic definition of an algorithm.

•Correctness: When the given inputs produce the desired output it indicates that the algorithm was designed correctly.

•Maintainability: It means that the algorithm should be designed in a straightforward, structured way so that when you redefine the algorithm, no significant changes are made to the algorithm.

•Functionality: It takes into account various logical steps to solve a real-world problem.

•Robustness: Robustness refers to an algorithm’s ability to define your problem clearly.

•User-friendly: If the algorithm is difficult to understand, the designer will not explain it to the programmer.

•Simplicity: If an algorithm is simple, it is simple to understand.Extensibility: An algorithm should be extensible, if another algorithm designer or programmer wants to use it.

Performance Analysis of an Algorithm

Why performance analysis?

There are many important things that should be taken care of, like user-friendliness, modularity, security, maintainability, etc. Why worry about performance? The answer to this is simple, we can have all the above things only if we have performance. So performance is like currency through which we can buy all the above things.

The algorithm’s performance can be measured in two ways:

Time Complexity

The amount of time required to complete an algorithm’s execution is called time complexity. The time complexity is calculated primarily by counting the number of steps required to complete the execution.

Space Complexity

The amount of space an algorithm requires to solve a problem and produce an output is called its space complexity.

- The space is required for an algorithm for the following reasons:

- To store program instructions.

- To store track of constant values.

- To store track of variable values. To store track of function calls, jumping statements, and so on.

Asymptotic Notation

Asymptotic Notation is used to describe the running time of an algorithm – how much time an algorithm takes with a given input, N. There are three different notations:

- Big Oh Notation, Ο: For the worst case running time.

- Omega Notation, Ω: For the best case running time.

- Theta Notation, θ: Used when the running time is the same for all cases.

5 Algorithmic Common Runtimes

The common algorithmic runtimes from fastest to slowest are:

- constant: O(1)

- logarithmic: O(log N)

- linear: O(N)

- polynomial: O(N2)

- exponential: O(2N) factorial: O(N!)

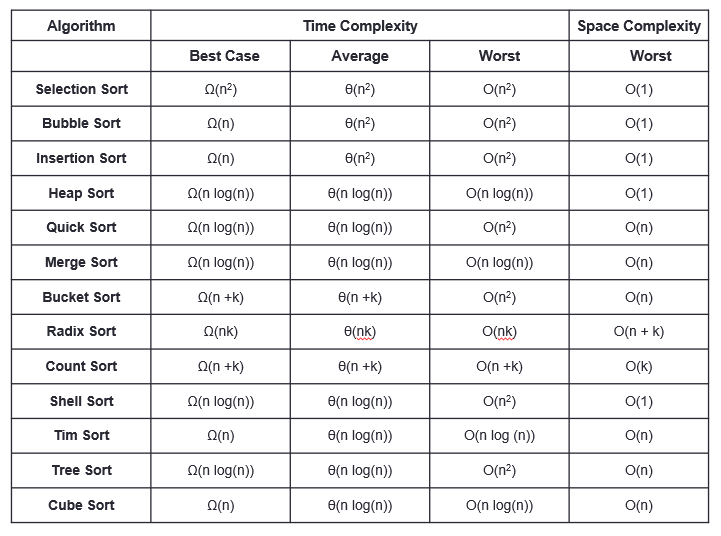

Time and Space Complexity of Different Algorithms

Big-Oh Notation, O

The Big-Oh notation describes the worst-case running time of a program. We compute the Big-Oh of an algorithm by counting how many iterations an algorithm will take in the worst-case scenario with an input of N.

Big-Oh notation represents the upper bound of the running time of an algorithm. Thus, it gives the worst-case complexity of an algorithm.

We typically consult the Big-Oh because we must always plan for the worst case.

For example, O(N) describes the Big-Oh of a linear search algorithm.

Omega Notation, Ω

The Omega describes the best running time of a program. We compute the Omega by counting how many iterations an algorithm will take in the best-case scenario based on an input of N.

Omega notation represents the lower bound of the running time of an algorithm. Thus, it provides the best case complexity of an algorithm.

For example, Ω(1) describes the Omega notation of a linear search algorithm.

Theta Notation, θ

The Theta notation encloses the function from above and below. Since it represents the upper and the lower bound of the running time of an algorithm, it is used for analyzing the average-case complexity of an algorithm.

For example, θ(N) describes the Theta of a linear search algorithm.

Simple Example

You need to do a work m hours each day for n days. Write a block of code using for loop to represent this scenario.

- for(day=1; day<n; day++) ———–n times

- {

- for(hour=1; hour<m; hour++) ——–m times

- {

- do the work;

- }

- }

- How much hour do you need to work? Answer is n×m hours. This is the complexity.

- So, complexity=n*m

- If you need to do the work 5 hours a day for 7 days long then n=7, m=5 time complexity:

- 1 day ——- 5 hour 7 day ——– 5×7 hours= 35 hours

How to calculate Time Complexity?

For an algorithm, if the input size is n, then f(n) is a function of n that denotes the time complexity of that algorithm.

Calculating the value of f(n) for smaller programs is easy but for bigger programs, it’s not that easy. We can compare the algorithms by comparing their f(n) values. We will find the growth rate of f(n). Because one algorithm may give better performance than other algorithms for a smaller input size but not for the larger input sizes. Now, how to find f(n).

Let’s look at a simple example.

- f(n) = 5n2 + 6n + 12 is the time complexity of an algorithm which depends on the size of the input, n.

- When n=1,

- 5n2 = 5×12 = 5,

- 6n = 6×1= 6 and

- 5n2 + 6n + 12 = (5×12)+(6×1)+12 = 5+6+12 = 23

- Now,

- % of running time due to 5n2 = (5÷23) × 100 = 21.74%

- % of running time due to 6n = (6÷23) × 100 = 26.09%

- % of running time due to 12 = (12÷23) × 100 = 52.17%

For n=1, minimum units of time (21.74%) are taken by 5n2 and maximum units of time (52.17%) are taken by 12. But along with the growth of n the dominance of 5n2 will increase.

Let’s assume the different values of n to find the growth rate of f(n).

| n | 5n2 | 6n | 12 |

| 1 | 21.74% | 26.09% | 52.17% |

| 10 | 87.41% | 10.49% | 2.09% |

| 100 | 98.79% | 1.19% | 0.02% |

| 1000 | 99.88% | 0.12% | 0.0002% |

As we can observe in the above table that with the increase in the value of n, the running time of 5n2 increases while the running time of 6n and 12 also decreases. Therefore, it is observed that for larger values of n, the squared term consumes almost 99% of the time. As the n2 term is contributing most of the time, so we can eliminate the rest two terms.

Therefore, f(n) = 5n2

Here, we are getting the approximate time complexity whose result is very close to the actual result. This approximate measure of time complexity is known as an asymptotic complexity. Here, we are not calculating the exact running time, we are eliminating the unnecessary terms, and we are just considering the term which is taking most of the time.

This function never grows faster than this upper bound (Big-Oh Notation, O) and never grows slower than lower bound (Omega Notation, Ω).

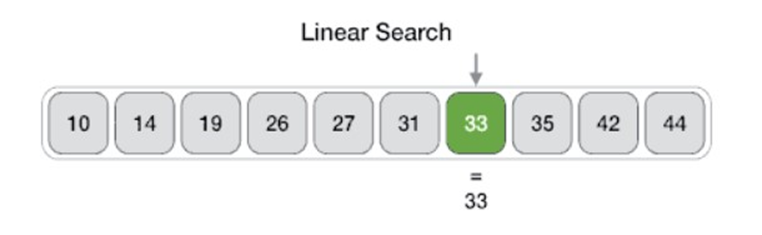

Linear Search

Linear search is also called as sequential search algorithm. It is the simplest searching algorithm. In Linear search, we simply traverse the list completely and match each element of the list with the item whose location is to be found. If the match is found, then the location of the item is returned; otherwise, the algorithm returns NULL.

It is widely used to search an element from the unordered list, i.e., the list in which items are not sorted. The worst-case time complexity of linear search is O(n).

Algorithm: Linear Search ( Array A, Value x)

- Step 1: Set i to 1

- Step 2: if i > n then go to step 7

- Step 3: if A[i] = x then go to step 6

- Step 4: Set i to i + 1

- Step 5: Go to Step 2

- Step 6: Print Element x Found at index i and go to step 8 Step 7: Print element not found

- Step 8: Exit

Example of Time Complexity in Linear Search Algorithm

Array=[34,54,12,67,23,59,60]

Number of elements in Array, N=7

Using Linear Search Algorithm:

| Search Item | No. of Steps | Case | Notation | Time Complexity |

| 60 | 7 | Worst Case | Big-Oh | O(N) |

| 34 | 1 | Best Case | Omega | Ω(1) |

| 67 | 4 | Average Case | Theta | θ(N) |

The space complexity of linear search is O(1)

Advantages and Drawbacks

Advantages of Linear Search:

- Linear search is simple to implement and easy to understand.

- Linear search can be used irrespective of whether the array is sorted or not. It can be used on arrays of any data type.

- Does not require any additional memory.

- It is a well suited algorithm for small datasets.

Drawbacks of Linear Search:

- Linear search has a time complexity of O(n), which in turn makes it slow for large datasets.

- Not suitable for large array.

- Linear search can be less efficient than other algorithms, such as hash tables.

Binary Search of Algorithms

Binary search is the search technique that works efficiently on sorted lists. Hence, to search an element into some list using the binary search technique, we must ensure that the list is sorted. Binary search follows the divide and conquer approach in which the list is divided into two halves, and the item is compared with the middle element of the list. If the match is found then, the location of the middle element is returned. Otherwise, we search into either of the halves depending upon the result produced through the match.

Algorithm: Binary_Search(a, lower_bound, upper_bound, val)

Step 1: set beg = lower_bound, end = upper_bound, pos = – 1

Step 2: repeat steps 3 and 4 while beg <=end

Step 3: set mid = (beg + end)/2

Step 4: if a[mid] = val

set pos = mid

print pos

go to step 6

else if a[mid] > val

set end = mid – 1

else

set beg = mid + 1

[end of if]

[end of loop]

Step 5: if pos = -1

print “value is not present in the array”

[end of if]

Step 6: exit

Example:

The array in which searching is to be performed is:

Let x = 4 be the element to be searched.

Set two pointers low and high at the lowest and the highest positions respectively.

Find the middle element mid of the array

ie. arr[(low + high)/2] = 6.

•If x == mid, then return mid. Else, compare the element to be searched with mid.

•If x > mid, compare x with the middle element of the elements on the right side of mid. This is done by setting low = mid + 1.

•Else, compare x with the middle element of the elements on the left side of mid. This is done by setting high = mid – 1.

•Repeat steps 3 to 6 until low meets high.

•x = 4 is found.

Mastering Algorithms: Unleashing the Positive Potential of Digital Logic

Alogrithms-Positive Aspects:

- Efficiency: Algorithms are designed to perform tasks quickly and accurately, which can save time and resources in various applications. They enable computers to process large amounts of data efficiently.

- Consistency: Algorithms provide a consistent and reliable approach to solving problems. When the same inputs are provided, algorithms produce the same outputs, ensuring consistency in processes.

- Automation: Algorithms can automate repetitive and time-consuming tasks, reducing the need for manual intervention. This can increase productivity and reduce human errors.

- Problem Solving: Algorithms are essential for problem-solving across various domains, from science and engineering to finance and healthcare. They enable complex problems to be tackled methodically.

- Innovation: Algorithms are at the core of technological innovations, such as artificial intelligence, machine learning, and data analysis. They enable the development of new and advanced technologies.

- Scalability: Algorithms can often be scaled up to handle larger datasets or more complex tasks without a proportional increase in resources, making them versatile in various contexts.

Algorithms-Negative Aspects:

- Bias: Algorithms can inherit and perpetuate biases present in the data they are trained on. This can lead to unfair or discriminatory outcomes, especially in applications like machine learning and AI.

- Privacy Concerns: Some algorithms, particularly in data mining and surveillance, can infringe on individuals’ privacy by collecting and analyzing personal data without consent.

- Transparency and Accountability: Complex algorithms can be difficult to understand, leading to a lack of transparency and accountability. When things go wrong, it may be challenging to identify the cause and assign responsibility.

- Algorithmic Trading Risks: Algorithms in financial markets can lead to rapid and unpredictable market fluctuations. Flash crashes and market manipulation are concerns associated with algorithmic trading.

- Job Displacement: Automation driven by algorithms can lead to job displacement in certain industries. While it can increase efficiency, it may also result in unemployment and economic disparities.

- Overreliance: Excessive reliance on algorithms for decision-making can reduce human critical thinking and creativity. Blind trust in algorithms may lead to poor decisions in some cases.

Conclusion:

Algorithms are fundamental to the field of computer science and play a pivotal role in solving a wide range of computational problems efficiently and effectively. They are step-by-step instructions that guide the execution of tasks and processes, providing a systematic and structured approach to problem-solving. The study and design of algorithms are essential for developing software, optimizing operations, and making informed decisions in various domains.

Algorithms come in many forms, from simple and straightforward to complex and sophisticated, and they can be categorized based on their purpose and characteristics. Some of the key categories include sorting algorithms, searching algorithms, optimization algorithms, and machine learning algorithms.

Efficiency is a critical factor in algorithm design, and analyzing the time and space complexity of algorithms helps in evaluating their performance. Big O notation and other complexity analysis tools are commonly used to quantify and compare the efficiency of different algorithms. Choosing the right algorithm for a specific task is often a balance between time and space trade-offs, and the choice can significantly impact the performance of a system.

Algorithms are applied in various fields, from data science and artificial intelligence to network routing and cryptography. They enable us to tackle real-world problems, make data-driven decisions, and create innovative technologies that drive progress in our digital world.

In summary, algorithms are the building blocks of computational problem-solving and are essential in our modern technological landscape. Understanding their principles, designing efficient algorithms, and applying them effectively are core skills for computer scientists and software engineers, enabling them to create more powerful, efficient, and intelligent systems. The study of algorithms continues to evolve as technology advances, and it remains a dynamic and exciting field at the heart of computer science and innovation. Visit R.P.Tech to learn more about technology

Leave A Comment